Note: In case this is not clear, this blog post reflects my own opinion and experience, and is not an official statement on behalf of my employer.

Since I started working at Mozilla headquarters, the number of job interviews I conduct has increased drastically, both in person and over the phone. Since I had rarely interviewed anyone before, this was as much a learning experience for me as it was a critical step in the applicants' job searches. Initially, I was perhaps just as nervous as the interviewees.

The experience has been mixed. Sometimes candidates come up with surprising answers or elegant solutions to simple problems, or simply show that they have grasped a problem and its solutions from beginning to end.

Perhaps just as often, however, the experience is frustrating, and increasingly so. One of the worst things to notice is that the candidate can't code. Seriously? Yes, seriously. Given a relatively simple programming question, they either fail to come up with a solution at all or, worse, produce one that reveals shocking gaps in their knowledge of basic algorithms, their understanding of code, or other qualities you would hope for in someone who makes software for a living and claims to have done so for a while.

Another thing that disappoints me is discovering that a candidate has no professional goals. Sure, you can't be prepared for every question in the world. But if you are looking for a job, yet have no idea where you want to go once you get it, how can you possibly convince me that you are the right person for the position?

Since I interview for our web development team, this makes me wonder: Do people consider "web" in front of "developer" to be a weakening qualifier? Or, more generally, does software engineering have a secret reputation for not being a real profession, with real professional requirements?

Mozilla is perhaps one of the few companies without a strict degree requirement: Some of our brightest minds have no formal college education, yet are incredibly successful and valued members of our community. This works because the Mozilla project, with many more volunteers than employees, is a meritocracy: If you do awesome things, people will respect you and turn to you for more awesomeness. If you aren't doing a good job, your impact on the community will be minute (and stay that way).

Maybe, though, this is sometimes mistaken for an excuse not to be really, really good. It's not about knowing everything there is to know about writing software. But if you are applying for one of the best positions in the industry, where you have to earn respect rather than show off formal credentials, shouldn't you at least try a little harder?

If I could give applicants across the Web industry a few tips to raise their chances of getting hired, and to improve the interview experience for interviewer and interviewee alike, I would say:

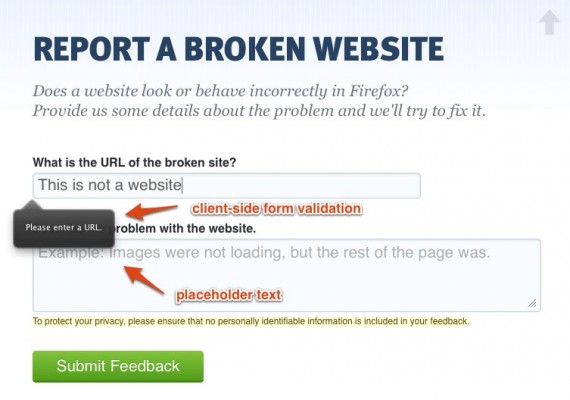

- Know your basics. There is a reason colleges teach algorithms and data structures very, very early, along with complexity and logic. The works. Even more so, know your Web. If you can't explain the nature of an HTTP request, how can you truly understand the applications you build? If you don't know how to secure a web application, how can you protect the privacy of your users?

- Know why you're awesome. When an interviewer goes home that day, they want to feel excited about hiring you. Give them a reason. That's no invitation to be snotty, but it is an invitation not to hold back on the exciting things there are to know about you professionally.

What's your experience with interviewing and hiring people? What are the things you want to see in an applicant? And what have you done to find the people who are right for you? I am interested in hearing your opinions in the comments.
