Since I released the first version of UpShot, the Python-based automatic screenshot uploader, last week, I have received plenty of good feedback (for example on Hacker News) and encouraging comments (like here on my blog), and quite a few issues have even been filed on GitHub.

Meet UpShot, Screenshot Sharing via Dropbox on OS X

Ways to share screenshots on the Internet are a dime a dozen.

HTML5-Compatible Blink Tag

We all know that the <blink> tag was always a bad idea. It's not part of any HTML standard, it's not semantic, and it is so horrible for accessibility and user experience that Jakob Nielsen called it "plain evil".

Bye bye Wordpress, Welcome Jekyll!

Feel free to blame it on @cupofT's excellent homebrew that I happened to be drinking the other day, but I decided it was time to blow off the thick layer of dust that my blog had accumulated over the years.

Really, really empty the OS X Trash

After updating software on OS X, it drops the previous version into the Trash, to be thrown out the next time you empty it.

A small script to install or update MakeMKV on Linux

Installing makemkv on Linux isn't so hard, but it's also a bit tedious, so here's a little script that'll do it for you. Tested on Ubuntu.

Fail-clown?

The BBC website is currently down, and, not to be outdone by the rest of the Internet, they're trying their own interpretation of a "fail pet", a "fail clown":

I don't know about you, but I think that thing is kind of creepy. Aren't fail pets supposed to make you feel warm and fuzzy while you're waiting for the site to come back? This one screams "make it stop!"... strange.

Thanks for the hint, nigelb!

Fail Pets Research in UX Magazine

I totally forgot to blog about this!

Remember how I curate a collection of fail pets across the Interwebs? Sean Rintel is a researcher at the University of Queensland in Australia and has put some thought into the UX implications of whimsical error messages, published in his article: The Evolution of Fail Pets: Strategic Whimsy and Brand Awareness in Error Messages in UX Magazine.

In his article, Rintel credits me with coining the term "fail pet".

Attentive readers may also notice that Mozilla's strategy of (rightly) attributing Adobe Flash's crashes to Flash itself by putting a "sad brick" in place worked remarkably well: Rintel (just like most users, I am sure) assumes this message comes from Adobe, not Mozilla:

Thanks, Sean, for the mention, and I hope you all enjoy his article.

Let's talk about password storage

Note: This is a cross-post of an article I published on the Mozilla Webdev blog this week.

During the course of this week, a number of high-profile websites (like LinkedIn and last.fm) have disclosed possible password leaks from their databases. The suspected leaks put huge amounts of important, private user data at risk.

What's common to both these cases is the weak security they employed to "safekeep" their users' login credentials. In the case of LinkedIn, it is alleged that an unsalted SHA-1 hash was used; in the case of last.fm, the technology used is, allegedly, an even worse unsalted MD5 hash.

Neither of the two technologies follows any sort of modern industry standard and, if they were in fact used by these companies in this fashion, this exhibits a gross disregard for the protection of user data. Let's take a look at the most obvious mistakes our protagonists made here, and then we'll discuss the password hashing standards that Mozilla web projects routinely apply in order to mitigate these risks.

A trivial no-no: Plain-text passwords

This one's easy: Nobody should store plain-text passwords in a database. If you do, and someone steals the data through any sort of security hole, they've got all your users' plain-text passwords. (That a bunch of companies still do that should make you scream and run the other way whenever you encounter it.) Our two protagonists above know that too, so they remembered that they read something about hashing somewhere at some point. "Hey, this makes our passwords look different! I am sure it's secure! Let's do it!"

Poor: Straight hashing

Smart mathematicians came up with something called a hashing function or "one-way function" H: password -> H(password). MD5 and SHA-1 mentioned above are examples of those. The idea is that you give this function an input (the password), and it gives you back a "hash value". It is easy to calculate this hash value when you have the original input, but prohibitively hard to do the opposite. So we create the hash value of all passwords, and only store that. If someone steals the database, they will only have the hashes, not the passwords. And because those are hard or impossible to calculate from the hashes, the stolen data is useless.
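To make the idea concrete, here is a minimal sketch of straight hashing using Python's standard `hashlib`. The function name `straight_hash` and the sample password are made up for illustration; this is the approach the post warns against, not something to copy.

```python
import hashlib


def straight_hash(password: str) -> str:
    """Return the unsalted SHA-1 hex digest of a password (insecure, illustration only)."""
    return hashlib.sha1(password.encode("utf-8")).hexdigest()


# Two users who happen to pick the same (hypothetical) password...
alice_hash = straight_hash("letmein")
bob_hash = straight_hash("letmein")

# ...end up with exactly the same value in the database.
print(alice_hash == bob_hash)  # True
print(len(alice_hash))         # 40 hex characters for SHA-1
```

Going from the password to the hash is a single cheap call; there is no matching function to go back.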

"Great!" But wait, there's a catch. For starters, people pick poor passwords. Write this one in stone, as it'll be true as long as passwords exist. So a smart attacker can start with a copy of Merriam-Webster, throw in a few numbers here and there, calculate the hashes for all those words (remember, it's easy and fast) and start comparing those hashes against the database they just stole. Because your password was "cheesecake1", they just guessed it. Whoops! To add insult to injury, they just guessed the password of everyone else who used the same phrase, because the hashes for the same password are the same for every user.

Worse yet, you can actually buy(!) precomputed lists of straight hashes (called Rainbow Tables) for alphanumeric passwords up to about 10 characters in length. Thought "FhTsfdl31a" was a safe password? Think again.

This attack is called an offline dictionary attack and is well-known to the security community.
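A toy version of such an attack might look like the sketch below. The word list, the suffixes, the user names, and the helper names (`sha1_hex`, `dictionary_attack`) are all invented for illustration; a real attacker would use far larger lists or precomputed tables.

```python
import hashlib


def sha1_hex(text: str) -> str:
    """Unsalted SHA-1 hex digest, as in the straight-hashing scheme."""
    return hashlib.sha1(text.encode("utf-8")).hexdigest()


def dictionary_attack(stolen_hashes, wordlist, suffixes=("", "1", "123")):
    """Return {user: guessed_password} for every stolen hash found in the dictionary."""
    # Hash every candidate once. Without salts, this table works against all users.
    lookup = {}
    for word in wordlist:
        for suffix in suffixes:
            candidate = word + suffix
            lookup[sha1_hex(candidate)] = candidate
    return {user: lookup[h] for user, h in stolen_hashes.items() if h in lookup}


# A pretend stolen database: user -> unsalted hash.
stolen = {
    "alice": sha1_hex("cheesecake1"),
    "bob": sha1_hex("cheesecake1"),
    "carol": sha1_hex("FhTsfdl31a"),
}

cracked = dictionary_attack(stolen, ["apple", "cheesecake", "zebra"])
print(cracked)  # {'alice': 'cheesecake1', 'bob': 'cheesecake1'}
```

Note that one guess cracked two accounts at once. Carol's password survives this tiny word list, but that says nothing about how it would fare against a full precomputed table.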

Even passwords taste better with salt

The standard way to deal with this is by adding a per-user salt. That's a long, random string added to the password at hashing time: H: password -> H(password + salt). You then store salt and hash in the database, making the hash different for every user, even if they happen to use the same password. In addition, the smart attacker cannot pre-compute the hashes anymore, because they don't know your salt. So after stealing the data, they'll have to try every possible password for every possible user, using each user's personal salt value.
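As a rough sketch of per-user salting, assuming SHA-256 as the hash and a 16-byte random salt (both are my choices for the example, not something the scheme prescribes):

```python
import hashlib
import hmac
import os


def hash_with_salt(password, salt=None):
    """Return (salt, digest) for H(password + salt); a fresh random salt is drawn if none is given."""
    if salt is None:
        salt = os.urandom(16)  # per-user random salt, stored next to the hash
    digest = hashlib.sha256(password.encode("utf-8") + salt).hexdigest()
    return salt, digest


def verify(password, salt, expected_digest):
    """Re-hash the login attempt with the stored salt and compare in constant time."""
    _, digest = hash_with_salt(password, salt)
    return hmac.compare_digest(digest, expected_digest)


salt_a, digest_a = hash_with_salt("cheesecake1")
salt_b, digest_b = hash_with_salt("cheesecake1")

print(digest_a == digest_b)                     # False: same password, different salts
print(verify("cheesecake1", salt_a, digest_a))  # True
```

The same password now produces a different stored value for every user, so a single precomputed table no longer covers the whole database.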

Great! I mean it, if you use this method, you're already scores better than our protagonists.

The 21st century: Slow hashes

But alas, there's another catch: Generic hash functions like MD5 and SHA-1 are built to be fast. And because computers keep getting faster, millions of hashes can be calculated very very quickly, making a brute-force attack even of salted passwords more and more feasible.

So here's what we do at Mozilla: Our WebApp Security team performed some research and set forth a set of secure coding guidelines (they are public, go check them out, I'll wait). These guidelines suggest the use of HMAC + bcrypt as a reasonably secure password storage method.

The hashing function has two steps. First, the password is hashed with an algorithm called HMAC, together with a local salt: H: password -> HMAC(local_salt + password). The local salt is a random value that is stored only on the server, never in the database. Why is this good? If an attacker steals one of our password databases, they would need to also separately attack one of our web servers to get file access in order to discover this local salt value. If they don't manage to pull off two successful attacks, their stolen data is largely useless.

As a second step, this hashed value (or strengthened password, as some call it) is then hashed again with a slow hashing function called bcrypt. The key point here is slow. Unlike general-purpose hash functions, bcrypt intentionally takes a relatively long time to be calculated. Unless an attacker has millions of years to spend, they won't be able to try out a whole lot of passwords after they steal a password database. Plus, bcrypt hashes are also salted, so no two bcrypt hashes of the same password look the same.

So the whole function looks like: H: password -> bcrypt(HMAC(password, local_salt), bcrypt_salt).
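Here is a sketch of that two-step shape using only the Python standard library. Important caveat: bcrypt is not in the standard library, so PBKDF2 (`hashlib.pbkdf2_hmac`) stands in for it here as the slow, salted step. This is not the django-sha2 implementation. The `LOCAL_SALT` value, the choice of SHA-512 for the HMAC, the iteration count, and all function names are assumptions made for the example.

```python
import hashlib
import hmac
import os

# Hypothetical local salt: in practice it would be loaded from server-side
# configuration and never written to the database.
LOCAL_SALT = b"hypothetical-secret-from-server-config"
ITERATIONS = 100_000  # assumed work factor for the slow step


def strengthen(password):
    """Step 1: HMAC the password with the local salt (SHA-512 is an assumption)."""
    return hmac.new(LOCAL_SALT, password.encode("utf-8"), hashlib.sha512).digest()


def hash_password(password, db_salt=None):
    """Step 2: run the strengthened value through a slow, salted hash.

    PBKDF2 is a stand-in for bcrypt here; db_salt plays the role of the bcrypt salt.
    """
    if db_salt is None:
        db_salt = os.urandom(16)
    slow = hashlib.pbkdf2_hmac("sha256", strengthen(password), db_salt, ITERATIONS)
    return db_salt, slow


def check_password(password, db_salt, stored):
    """Recompute both steps for a login attempt and compare in constant time."""
    _, candidate = hash_password(password, db_salt)
    return hmac.compare_digest(candidate, stored)


db_salt, stored = hash_password("cheesecake1")
print(check_password("cheesecake1", db_salt, stored))  # True
print(check_password("cheesecake2", db_salt, stored))  # False
```

Only `db_salt` and `stored` would go into the database. Without `LOCAL_SALT`, which lives on the web server, an attacker holding the database cannot even start guessing.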

We wrote a reference implementation for this for Django: django-sha2. Like all Mozilla projects, it is open source, and you are more than welcome to study, use, and contribute to it!

What about Mozilla Persona?

Funny you should mention it. Mozilla Persona (née BrowserID) is a new way for people to log in. Persona is the password specialist, and takes the burden/risk away from sites for having to worry about passwords altogether. Read more about Mozilla Persona.

So you think you're cool and can't be cracked? Challenge accepted!

Make no mistake: just like everybody else, we're not invincible at Mozilla. But because we actually take our users' data seriously, we take precautions like this to mitigate the effects of an attack, even in the unfortunate event of a successful security breach in one of our systems.

If you're responsible for user data, so should you.

If you'd like to discuss this post, please leave a comment at the Mozilla Webdev blog. Thanks!

Fun with ebtables: Routing IPTV packets on a home network

In my home network, I use IPv4 addresses out of the 10.x.y.z/8 private IP block. After AT&T U-Verse contacted me multiple times to make me reconfigure my network so they could establish a large-scale NAT and give me a private IP address rather than a public one (this might be material for a whole separate post), I reluctantly switched ISPs and now have Comcast. I did, however, keep AT&T for television. Now, U-Verse is an IPTV provider, so I had to put the two services (Internet and IPTV) onto the same wire, which as it turned out was not as easy as it sounds.

tl;dr: This is a "war story" more than a crisp tutorial. If you really just want to see the ebtables rules I ended up using, scroll all the way to the end.

IPTV uses IP Multicast, a technology that allows a single data stream to be sent to a number of devices at the same time. If your AT&T-provided router is the centerpiece of your network, this works well: The router is intelligent enough to determine which one or more receivers (and on what LAN port) want to receive the data stream, and it only sends data to that device (and on that wire).

Turns out, my dd-wrt-powered Cisco E2000 router is--out of the box--not that intelligent and, like most consumer devices, will simply turn such multicast packets into broadcast packets. That means it takes the incoming data stream and delivers it to all attached ports and devices. On a wired network, that's sad, but not too big a deal: Other computers and devices will see these packets, determine they are not addressed to them, and drop the packets automatically.

Once your wifi becomes involved, this is a much bigger problem: The IPTV stream's unwanted packets easily saturate the wifi capacity and keep any wifi device from doing its job, while it is busy discarding packets. This goes so far as to make it entirely impossible to even connect to the wireless network anymore. Besides: Massive, bogus wireless traffic empties device batteries and fills up the (limited and shared) frequency spectrum for no useful reason.

One solution for this is to install only managed switches that support IGMP snooping and thus limit multicast traffic to the relevant ports. I wasn't too keen on buying a bunch of really expensive new hardware though.

In comes ebtables, part of netfilter (the Linux kernel-level firewall package). First I wrote a simple rule intended to keep all multicast packets (no matter their source) from exiting on the wireless device (eth1, in this case).

ebtables -A FORWARD -o eth1 -d Multicast -j DROP

This works in principle, but has some ugly drawbacks:

-d Multicast translates into a destination address pattern that also covers (intentional) broadcast packets (i.e., every broadcast packet is a multicast packet, but not vice versa). These things are important and power DHCP, SMB networking, Bonjour, ... . With a rule like this, none of these services will work anymore on the wifi you were trying to protect.

-o eth1 keeps us from flooding the wifi, but will do nothing to keep the needless packets sent to wired devices in check. While we're in the business of filtering packets, might as well do that too.
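Why does a multicast match swallow broadcasts too? At the Ethernet level, an address counts as multicast when the lowest bit of its first byte is set, and the broadcast address ff:ff:ff:ff:ff:ff has every bit set. A small Python sketch of that check (the helper names and sample addresses are made up for illustration):

```python
def parse_mac(mac):
    """Turn 'aa:bb:cc:dd:ee:ff' into six raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def is_multicast(mac):
    """Group (multicast) addresses have the lowest bit of the first byte set."""
    return bool(parse_mac(mac)[0] & 0x01)


def is_broadcast(mac):
    """Broadcast is the single all-ones address."""
    return parse_mac(mac) == b"\xff" * 6


for mac in ("ff:ff:ff:ff:ff:ff", "01:00:5e:01:02:03", "00:1a:2b:3c:4d:5e"):
    print(mac, is_multicast(mac), is_broadcast(mac))
# ff:ff:ff:ff:ff:ff True True    (broadcast, and therefore also multicast)
# 01:00:5e:01:02:03 True False   (multicast only)
# 00:1a:2b:3c:4d:5e False False  (plain unicast)
```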

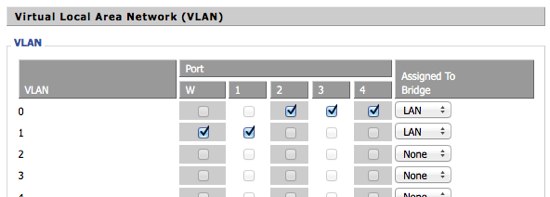

So let's create a new VLAN in the dd-wrt settings that only contains the incoming port (here: W) and the IPTV receiver's port (here: 1). We bridge it to the same network, because the incoming port is not only the source of IPTV, but also our connection to the Internet, so the remaining ports need to be able to connect to it still.

Then we tweak our filters:

ebtables -A FORWARD -d Broadcast -j ACCEPT

ebtables -A FORWARD -p ipv4 --ip-src ! 10.0.0.0/24 -o ! vlan1 -d Multicast -j DROP

This first accepts all broadcast packets (which it would do by default anyway, if it wasn't for our multicast rule), then any other multicast packets are dropped if their output device is not vlan1, and their source IP address is not local.

With this modified rule, we make sure that any internal applications can still function properly, while we tightly restrict where external multicast packets flow.
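If it helps to see the decision logic spelled out, here is a small Python model of how I read those two rules. It is only a sketch: it assumes the FORWARD chain's default policy is ACCEPT, and the `verdict` function, the sample addresses, and the interface names other than vlan1 and eth1 are invented for the example.

```python
import ipaddress

LOCAL_NET = ipaddress.ip_network("10.0.0.0/24")  # the range used in --ip-src


def verdict(dst_mac, src_ip, out_iface, is_ipv4=True):
    """Model the two FORWARD rules in order; the first matching rule decides."""
    octets = bytes(int(part, 16) for part in dst_mac.split(":"))

    # Rule 1: -d Broadcast -j ACCEPT
    if octets == b"\xff" * 6:
        return "ACCEPT"

    # Rule 2: IPv4, source not local, output not vlan1, destination multicast -> DROP
    multicast = bool(octets[0] & 0x01)
    external = ipaddress.ip_address(src_ip) not in LOCAL_NET
    if is_ipv4 and multicast and external and out_iface != "vlan1":
        return "DROP"

    # Assumed default policy of the chain
    return "ACCEPT"


print(verdict("01:00:5e:01:02:03", "203.0.113.5", "eth1"))   # DROP: IPTV stream headed for wifi
print(verdict("01:00:5e:01:02:03", "203.0.113.5", "vlan1"))  # ACCEPT: IPTV stream to the receiver
print(verdict("01:00:5e:00:00:fb", "10.0.0.12", "eth1"))     # ACCEPT: local multicast, e.g. Bonjour
print(verdict("ff:ff:ff:ff:ff:ff", "10.0.0.12", "eth1"))     # ACCEPT: broadcast, e.g. DHCP
```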

That was easy, wasn't it!

Some illustrations courtesy of Wikipedia.