With the Subversion VCS, one way to import external modules or libraries into a code tree is by defining the svn:externals property on a directory in your repository. Subversion will then check out the specified revision (or the latest revision) of the other repository into your source tree when you check out your code.

Submodules are basically the same thing in the "git" world.

And since git can talk to Subversion repositories with git svn, we should be able to specify a third-party SVN repository as a submodule in our own git repository, right? Sadly the answer is currently: No.

Here is a workaround that I have been using to achieve at least a similar effect, while keeping both SVN and git happy. This assumes that you have a local git repository that is also available somewhere else "up-stream" (such as GitHub), and that you want to import an external SVN repository into your source tree.

1: Add a tracking branch for SVN

Add a section referring to your desired SVN repository to your .git/config file:

(...)

[svn-remote "product-details"]

url = http://svn.mozilla.org/libs

fetch = product-details:refs/remotes/product-details-svn

Note that in the fetch line, the part before the colon refers to the branch you want to check out of SVN (for example: trunk), and the part after that will be our local remote branch location, i.e. product-details-svn will be our remote branch name.
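If you'd rather not edit .git/config by hand, the same section can most likely be written with git config instead. Here is a minimal sketch of that idea. Note that add_svn_remote is a helper name I made up for illustration, not a git command, and it assumes you run it from inside your git repository:

```shell
# Hypothetical helper (not part of git): writes the [svn-remote "<name>"]
# section shown above with git config instead of a text editor.
add_svn_remote() {
    name=$1        # svn-remote name, e.g. product-details
    url=$2         # base URL of the SVN repository
    svn_path=$3    # path below the URL to fetch, e.g. trunk or product-details

    # The remote branch name "<name>-svn" follows the convention used in this post.
    git config "svn-remote.$name.url" "$url" &&
    git config "svn-remote.$name.fetch" "$svn_path:refs/remotes/$name-svn"
}
```

Called as add_svn_remote product-details http://svn.mozilla.org/libs product-details, this should give you the same config section as the hand-edited one.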

Now, fetch the remote data from SVN, specifying a revision range unless you want to check out the entire history of that repository:

git svn fetch product-details -r59506:HEAD

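git svn will fetch those revisions and store them in the remote branch product-details-svn. Nothing is checked out into your working tree yet.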

2: Clone the tracking branch locally

Now we have an SVN tracking branch, but to use it as a submodule, we need a real git repository to point at. A branch of our current repository keeps everything in one place and works just as well. So let's create a new local branch from the tracking branch:

git checkout -b product-details-git product-details-svn

As git branch can confirm, you'll now have (at least) two branches: master and product-details-git.

3: Import the branch as a submodule

Now let's make the new branch available upstream:

git push --all

After that's been pushed, we can import the new branch as a submodule where we want it in the tree:

git checkout master

git submodule add -b product-details-git ../reponame.git my/submodules/dir/product-details

Note that ../reponame.git refers to the up-stream repository's name (git resolves a relative URL like this against the URL of your own repository's origin remote), and -b ... names the branch we created earlier. Git will clone the up-stream repository into that directory and check out the right branch automatically.

Don't forget to git commit and you're done!

Updating from SVN

Updating the "external" from SVN is unfortunately a tedious three-step process :(. First, fetch changes from SVN:

git svn fetch product-details

Second, merge these changes into your local git branch and push the changes up-stream:

git checkout product-details-git

git merge product-details-svn

git push origin HEAD

And finally, update the submodule and "pin it" at the newer revision:

git checkout master

cd my/submodules/dir/product-details

git pull origin product-details-git

cd ..

git add product-details

git commit -m 'updating product details'
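Since these steps are always the same, they could be wrapped in a small shell function. This is only a sketch under a few assumptions: the names update_svn_external and run, and the DRY_RUN switch, are my own inventions for illustration. It also assumes you call it from the top of your working tree, that your main branch is master and your up-stream remote is origin, and that your git is new enough to support git -C.

```shell
# run: print a command, then execute it unless DRY_RUN=1 is set.
# (Hypothetical helper, only here so the steps can be previewed safely.)
run() {
    echo "+ $*"
    [ "${DRY_RUN:-0}" = "1" ] || "$@"
}

# update_svn_external: the three update steps from this post in one go.
# Stops at the first command that fails.
update_svn_external() {
    svn_remote=$1       # svn-remote name, e.g. product-details
    git_branch=$2       # local git branch, e.g. product-details-git
    svn_ref=$3          # SVN tracking branch, e.g. product-details-svn
    submodule_path=$4   # e.g. my/submodules/dir/product-details

    # Step 1: fetch changes from SVN
    run git svn fetch "$svn_remote" &&
    # Step 2: merge them into the git branch and push up-stream
    run git checkout "$git_branch" &&
    run git merge "$svn_ref" &&
    run git push origin HEAD &&
    # Step 3: update the submodule and pin it at the newer revision
    run git checkout master &&
    run git -C "$submodule_path" pull origin "$git_branch" &&
    run git add "$submodule_path" &&
    run git commit -m 'updating product details'
}
```

Setting DRY_RUN=1 before calling update_svn_external product-details product-details-git product-details-svn my/submodules/dir/product-details only prints the commands, which is a cheap way to check the arguments before letting it touch anything.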

Improvements?

This post is as much a set of instructions as it is a call for improvements. If you have an easier way to do this, or if you know how to speed up or simplify any of this, a comment to this post would be very much appreciated!

Read more…

Let me outline some of the highlights:

Let me outline some of the highlights: I also went to some presentations that affect my work on the Mozilla project slightly less:

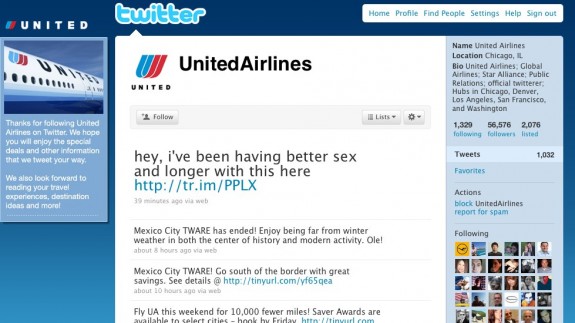

I also went to some presentations that affect my work on the Mozilla project slightly less: [/caption]Over the recent weeks I've got frequent blog spam along the lines of:

[/caption]Over the recent weeks I've got frequent blog spam along the lines of: